Hand gestures dataset

This corpora contains three datasets created to train and evaluate hand gesture detection methods: 1) a dataset containing real images, 2) a synthetic dataset, and 3) a dataset containing descriptions of scenes. The gestures included in the corpora images are the following: point, drag, loupe, pinch, other gestures (for example, thumb-up or palm) and none (images without hands). These datasets were labeled indicating both the category and the position of the gestures. For the position, the coordinates were annotated using bounding boxes for: 1) the position of the hand within the image, 2) the position of the fingertips (the index fingertip for the point class and the index and middle fingertips for the drag class), and 3) the coordinates and labels of the objects pointed to for the class point.

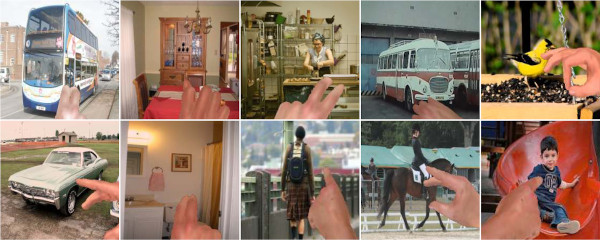

The samples of the dataset with real images were extracted from videos obtained with mobile phones. In order to ensure that the data generated were varied, different phone cameras were used to record indoor and outdoor scenes, with varied backgrounds and under different lighting conditions. In addition to images of the four proposed gestures, samples of backgrounds without gestures and of gestures other than those proposed were also extracted from these videos. Note that in the case of the dynamic pinch gesture, an average of 10 consecutive frames was extracted for each gesture in order to evaluate the movement of the fingers and their position. A total of 12,064 frames were extracted from the original videos, trying to balance the number of samples selected for each class. The following figure shows some examples of the images included in this dataset.

A synthetic dataset was also elaborated in order to improve the results obtained and help the training process. This dataset was created using a modified version of the LibHand tool, an open-source library for the rendering of human hand poses. We modified this library so as to enable the definition of gestures through a set of rules with the ranges of movement allowed for the finger joints, thus enabling variations of these gestures to be generated randomly within these ranges. This tool was used in order to automatically generate and label a dataset with a total of 19,200 images (3,200 of each gesture), with random variations in position, in the shape of the gestures, in the color of the skin, including random blur to emulate the motion effect, and with different backgrounds (using random images from Flickr8k and Visual Object Classes (VOC) datasets). The following figure shows some examples of the gestures generated.

An additional dataset of images with associated descriptions was also generated to evaluate captioning methods. For this, we used the Flickr8k dataset, which contains 8,000 images manually selected from the Flickr website with five descriptions of each image. Besides, we added a subset of 4,000 images from our dataset of real images, which also included 5 descriptions per image (this allowed the proposed system to adapt to our type of data, i.e., images of gestures taken with mobile phones). This subset includes both loupe and point gesture images (2,000 for each). The point gesture was included in order to increase the variability, and also make it possible to use the captioning head for these gestures (which could be appropriate for some applications).

The original resolution used for videos and images was 1,920 x 1,080 pixels. However, after conducting a series of initial performance and accuracy experiments at different resolutions, and also motivated by the restrictions of some of the methods evaluated, we decided to scale the images to a spacial resolution of 224x224 pixels. The following table shows a summary of the three datasets elaborated, including the number of samples per class in each dataset.

| Gesture | # Synthetic samples | # Real samples | # Captioning samples | Description |

|---|---|---|---|---|

| Point | 3,200 | 2,000 | 2,000 | Images of pointing gestures. |

| Drag | 3,200 | 2,000 | – | Images including drag gestures. |

| Loupe | 3,200 | 2,000 | 2,000 | Samples including loupe gestures. |

| Pinch | 3,200 | 2,064 | – | Sequences of dynamic pinch gestures. |

| Other | 3,200 | 2,000 | – | Images of gestures other than the four defined. |

| None | 3,200 | 2,000 | 8,000 | Samples in which no hand appears. |

| Total | 19,200 | 12,064 | 12,000 | Grand total: 43,264 |

RELATED PUBLICATION (CITATION)

Please, if you use these datasets or part of them, cite the following publication:

@inproceedings{Alashhab2022,

author = {Samer Alashhab and Antonio Javier Gallego and Miguel Ángel Lozano},

title = {Efficient gesture recognition for the assistance of visually impaired people using multi-head neural networks},

journal = {Engineering Applications of Artificial Intelligence},

volume = {114},

pages = {105188},

year = {2022},

issn = {0952-1976},

doi = {https://doi.org/10.1016/j.engappai.2022.105188},

url = {https://www.sciencedirect.com/science/article/pii/S0952197622002871}

}

DOWNLOAD

To download this dataset use the following link:

The files of the dataset are organized as follows:

- Real:

- Annotations (XML file with the annotations for the gestures: drag, loupe, other, point and scale)

- Images (JPG format)

- Synthetic:

- Without augmentation (hand gestures with transparent background):

- Annotations (TXT file with the position of the gesture and the tip of the index finger).

- Images (PNG format)

-

With augmentation (images from the "Without augmentation" folder including assorted backgrounds):

- Annotations (TXT file with the position of the gesture and the tip of the index finger).

- Images (PNG format)

- Without augmentation (hand gestures with transparent background):